Would you like to control your TV with your voice without spending a lot of money? ... Amazing, right? In this post, I will show you how to do that and more.

One of my dreams has always been to control things without touching them, for example the television, because I get tired of raising my hand to change the channel. So ... let's create a device that can do this automatically.

What things will we need?

First, I should understand the problem. For example: if we want to control a TV that is not smart, how will we do that? ... One possibility is to send infrared (IR) signals to transmit the actions that the person wants.

Also, if I want the device to hear me, I need a microphone. Additionally, it should have a speaker to talk back to people.

Further, I will need a database to save all the information, APIs that can help me with the smart logic, and cheap electronic components like a Raspberry Pi, resistors, LEDs, wires and a protoboard.

TV Interaction

Controlling a TV that is not smart can be difficult. On this occasion, I will use infrared (IR) signals to interact with the television, so I need to research them a bit more.

First, you need to know what infrared is. Infrared radiation is a type of electromagnetic radiation. It is invisible to human eyes, but people can feel it as heat. It has frequencies from about 300 GHz up to about 400 THz and wavelengths from about 1 millimeter (0.04 inches) down to about 740 nanometers (0.00003 inches).
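Frequency and wavelength are two views of the same number, linked by the speed of light. Here is a minimal sketch of that conversion. The 940 nm value is my assumption of a typical remote-control IR LED, it is not something measured in this project.

```python
# Speed of light in a vacuum, in meters per second.
SPEED_OF_LIGHT = 299_792_458

def wavelength_nm(frequency_hz):
    """Convert a frequency in hertz to a wavelength in nanometers."""
    return SPEED_OF_LIGHT / frequency_hz * 1e9

def frequency_thz(wavelength_in_nm):
    """Convert a wavelength in nanometers to a frequency in terahertz."""
    return SPEED_OF_LIGHT / (wavelength_in_nm * 1e-9) / 1e12

# Upper edge of the infrared band, next to visible red light.
print(f"{wavelength_nm(400e12):.0f} nm")   # 749 nm

# Assumed wavelength of a common remote-control IR LED.
print(f"{frequency_thz(940):.0f} THz")     # 319 THz
```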

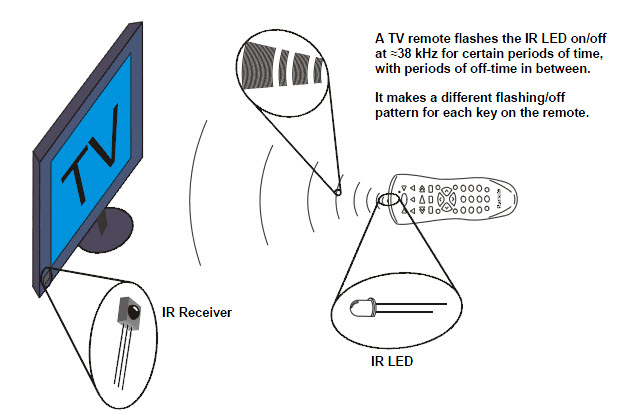

A TV remote control uses IR waves to change channels. In the remote, an IR light-emitting diode (LED) or laser sends out binary coded signals as rapid on/off pulses. A detector in the TV converts these light pulses to electrical signals that instruct a microprocessor to change the channel, adjust the volume or perform other actions. IR lasers can be used for point-to-point communications over distances of a few hundred meters or yards.

In our case, I created a circuit and connected it to the Raspberry Pi. It can record the IR signal for each event and save it in a database. The circuit also has an IR transmitter to send events to the TV.
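To make "binary coded signals as rapid on/off pulses" more concrete, here is a small sketch that encodes one command as a list of pulse durations. It assumes the NEC protocol, which many remotes use, but your TV may use a different one. The timings are the commonly quoted approximate values, and the address and command numbers are made up for illustration.

```python
# Approximate NEC timings in microseconds (assumption: the TV speaks NEC).
LEADER_MARK, LEADER_SPACE = 9000, 4500
BIT_MARK = 560
ZERO_SPACE, ONE_SPACE = 560, 1690

def bits_lsb_first(byte):
    """Yield the 8 bits of a byte, least significant bit first."""
    return [(byte >> i) & 1 for i in range(8)]

def encode_nec(address, command):
    """Return alternating on/off durations: on, off, on, off, ... on."""
    # NEC sends each byte followed by its bitwise inverse as a simple check.
    payload = [address, address ^ 0xFF, command, command ^ 0xFF]
    pulses = [LEADER_MARK, LEADER_SPACE]
    for byte in payload:
        for bit in bits_lsb_first(byte):
            pulses.append(BIT_MARK)                       # LED on
            pulses.append(ONE_SPACE if bit else ZERO_SPACE)  # LED off
    pulses.append(BIT_MARK)  # final burst that closes the last bit
    return pulses

# Hypothetical address and command, only for illustration.
pulses = encode_nec(address=0x00, command=0x10)
print(len(pulses))  # 67
```

The receiver only needs to measure how long each "off" gap lasts to tell a 0 from a 1.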
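Saving a recorded signal per event can be sketched like this. I am assuming SQLite and a list of pulse durations as the recorded format. The table name, column names and event names are hypothetical, and this is not necessarily the schema used in the project.

```python
import json
import sqlite3

def open_db(path=":memory:"):
    """Open the database and create the (hypothetical) signals table."""
    conn = sqlite3.connect(path)
    conn.execute(
        "CREATE TABLE IF NOT EXISTS ir_signals ("
        "event TEXT PRIMARY KEY, pulses TEXT NOT NULL)"
    )
    return conn

def save_signal(conn, event, pulses):
    """Store the recorded pulse durations for an event, replacing old ones."""
    conn.execute(
        "INSERT OR REPLACE INTO ir_signals (event, pulses) VALUES (?, ?)",
        (event, json.dumps(pulses)),
    )
    conn.commit()

def load_signal(conn, event):
    """Return the pulse durations for an event, or None if not recorded."""
    row = conn.execute(
        "SELECT pulses FROM ir_signals WHERE event = ?", (event,)
    ).fetchone()
    return json.loads(row[0]) if row else None

conn = open_db()
save_signal(conn, "volume_up", [9000, 4500, 560, 560, 560, 1690, 560])
print(load_signal(conn, "volume_up"))
```

Recording once and replaying later means the Raspberry Pi never has to understand the protocol of the remote, it only repeats what it saw.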

Audio Processing

As you know, our ears enable us to understand what people are saying. So, if we want our device to act on voice commands, first ... we should analyze the audio.

Audio processing is hard, because it has to take into account different accents, the context, the noise, regional variations and more. Currently, many companies such as Google and IBM use Deep Learning to create sophisticated models that can transcribe audio to text with considerable confidence.

In this project, I used the Google Assistant SDK. It is a powerful framework from Google that processes audio with high confidence, supports several languages, has low latency and can be integrated with a lot of devices. Another service that I used was the IBM Speech to Text (STT) tool (demo).

On the other hand, the device should be able to talk, so that it feels friendly. This is also difficult, because the voice can sound a bit weird and robotic. However, with Deep Learning we can synthesize voices that sound more human. To make my life easier, I used text-to-speech services such as Google TTS and IBM TTS (demo), which convert the text to audio, and then I played it using VLC on the Raspberry Pi.
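Playing the generated audio with VLC can be done from Python by calling the command-line player. This is a minimal sketch that assumes `cvlc` (VLC without its graphical interface) is installed on the Raspberry Pi and that the TTS service already wrote the audio to a file. The file name is hypothetical.

```python
import subprocess

def vlc_command(audio_path):
    """Build the command that plays one file and then quits VLC."""
    # --play-and-exit makes VLC close when the file ends,
    # so the call returns and the device can listen again.
    return ["cvlc", "--play-and-exit", audio_path]

def play(audio_path):
    """Play the audio file and wait until playback finishes."""
    subprocess.run(vlc_command(audio_path), check=True)

# Example (needs VLC installed, so it is left commented out):
# play("reply.mp3")
```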

Natural Language Processing

At this point, we have one module that converts text to audio and another that extracts text from audio, but what will we do with this text? We need something to get the intent and understand what the person wants to do, right? So, let's use Machine Learning and Natural Language Processing to solve this issue.

One of the applications that I used was chatbots. Currently, there are many frameworks for creating chatbots, for instance: Dialogflow (by Google, previously called API.AI), Watson Assistant (by IBM, formerly called Watson Conversation), Wit.ai (Facebook), Microsoft Bot Framework, and more. All of them are powerful tools. I used Dialogflow because it is free and it has amazing features, even though Watson Assistant showed more accurate results in some tests done in the past.

So, I trained several intents and entities in Dialogflow to analyze the text. The intents and parameters detected let the Raspberry Pi make decisions, for instance: to identify whether the person wants to change the channel and to which channel, wants to watch the next channel, or needs more volume on the TV. I also used context variables to make decisions about something previously mentioned.
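Once the chatbot returns an intent and its parameters, the Raspberry Pi has to turn them into remote-control keys. Here is a rough sketch of that decision step. The intent names, parameter names and key names are all hypothetical, they are not the ones trained in my Dialogflow agent.

```python
# Intents that map directly to a single key press (hypothetical names).
SIMPLE_INTENTS = {
    "tv.power": "KEY_POWER",
    "tv.mute": "KEY_MUTE",
    "tv.channel.next": "KEY_CHANNELUP",
    "tv.channel.previous": "KEY_CHANNELDOWN",
}

def keys_for_intent(intent, parameters):
    """Translate an intent and its parameters into a list of key names."""
    if intent == "tv.channel.set":
        # Numeric parameters may arrive as floats (25.0), so normalize first.
        number = int(parameters["channel"])
        return [f"KEY_{digit}" for digit in str(number)]
    if intent == "tv.volume.up":
        steps = int(parameters.get("steps", 1))
        return ["KEY_VOLUMEUP"] * steps
    if intent in SIMPLE_INTENTS:
        return [SIMPLE_INTENTS[intent]]
    return []  # unknown intent: send nothing to the TV

print(keys_for_intent("tv.channel.set", {"channel": 25.0}))
# ['KEY_2', 'KEY_5']
```

Each key name would then be looked up to find the IR signal to transmit.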

Total Integration

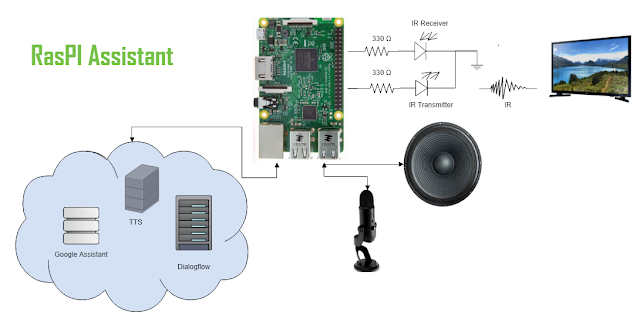

Here we come to the best part ... in which I connect all the modules in a single project. First, I created a circuit to record and send IR signals, then I connected it to the Raspberry Pi (RPI) and developed a Python module to drive it.

Additionally, I connected a microphone and a speaker to the RPI (I used a USB adapter). Then, I downloaded and installed the Google Assistant SDK on the embedded system and tested that the voice commands were transcribed to text. Next, I created a chatbot on Dialogflow, which receives that text and identifies what the user really wants to do.

Using the response, I converted the text response to audio (TTS) and played it on the RPI with VLC. I used the intents and parameters to send the correct IR command to the TV. The application can do several things with the TV, for instance: turn on/off, mute/unmute, change to a channel by number, go to the next and previous channel, remember the last channel, modify the volume and more.
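The whole flow can be summarized as one function that chains the modules. This is only a sketch of the architecture: every callable is a stand-in that I pass in as a parameter, and the shape of the chatbot result (`reply` and `keys`) is an assumption, not the real response of Dialogflow.

```python
def handle_utterance(audio, transcribe, detect_intent, speak, send_ir):
    """Run one voice command through the pipeline: STT, NLP, TTS, IR."""
    text = transcribe(audio)        # speech to text
    result = detect_intent(text)    # chatbot: reply text and keys to press
    if result.get("reply"):
        speak(result["reply"])      # text to speech, played on the speaker
    for key in result.get("keys", []):
        send_ir(key)                # transmit each key to the TV
    return {"text": text, **result}

# Usage with fake modules, so it runs without any hardware or API keys.
sent = []
result = handle_utterance(
    b"fake-audio",
    transcribe=lambda audio: "volume up",
    detect_intent=lambda text: {"reply": "Turning it up", "keys": ["KEY_VOLUMEUP"]},
    speak=lambda reply: None,
    send_ir=sent.append,
)
print(sent)  # ['KEY_VOLUMEUP']
```

Passing the modules in as parameters makes it easy to swap one service for another, for example Google TTS for IBM TTS, without touching the rest.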